Circle Attention: Forecasting Network Traffic by Learning Interpretable Spatial Relationships from Intersecting Circles (2023)

ECML-PKDD 2023

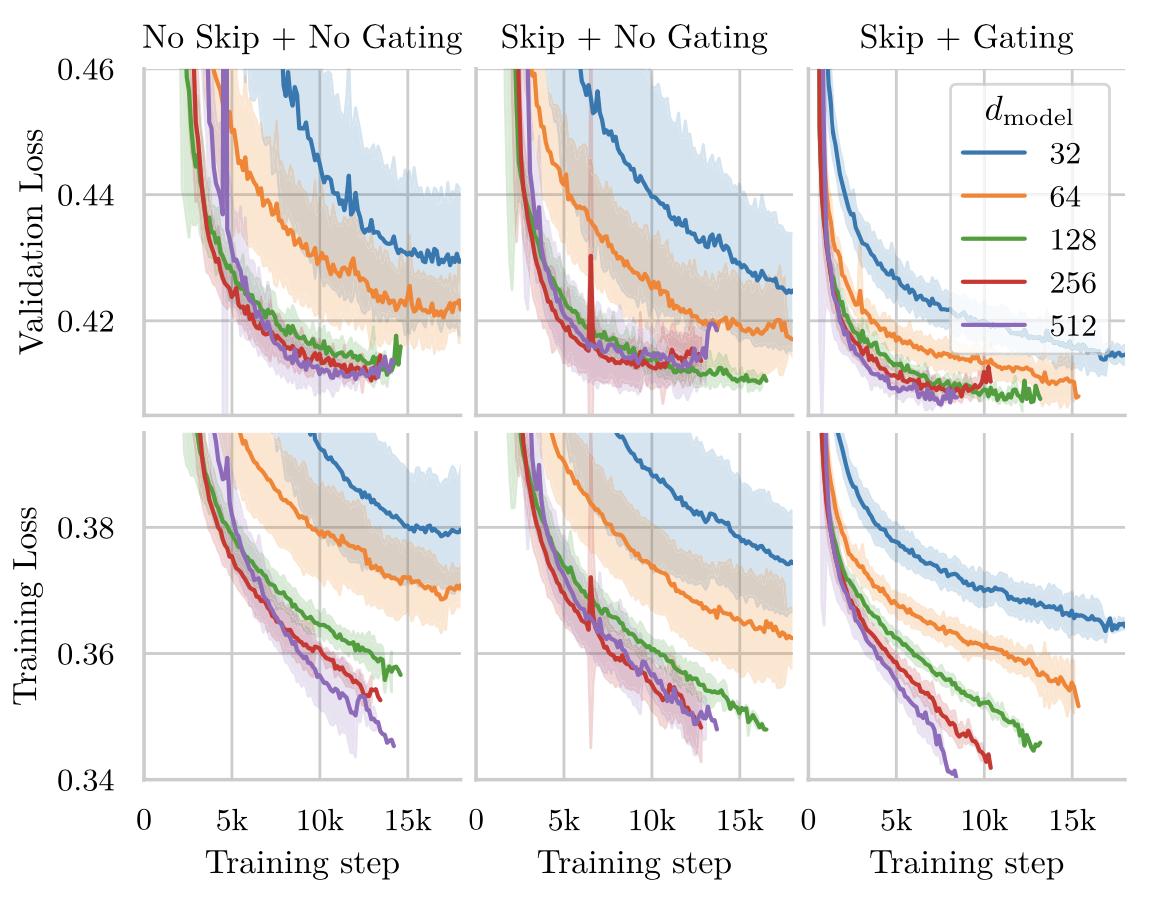

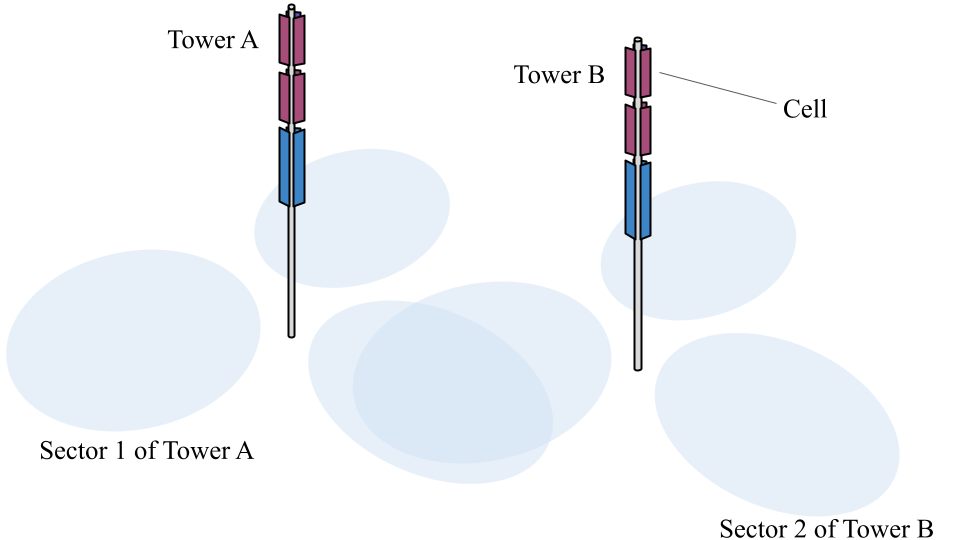

Abstract

Accurately forecasting traffic in telecommunication networks is essential for operators to efficiently allocate resources, provide better services, and save energy. We propose Circle Attention, a novel spatial attention mechanism for telecom traffic forecasting, which directly models the area of effect of neighboring cell towers. Cell towers typically point in three different geographical directions, called sectors. Circle Attention models the relationships between sectors of neighboring cell towers by assigning each sector a circle with three learnable parameters: the azimuth of the sector, the distance from the cell tower to the center of the circle, and the radius of the circle.
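
As a rough illustration of this parameterization, the following Python sketch places a sector's circle from a tower position, an azimuth, and a distance, then computes the overlap area of two such circles. The function names, the planar coordinates, the compass convention for the azimuth (degrees clockwise from north), and the use of overlap area as a signal of how strongly two sectors relate are all assumptions made for illustration. The abstract does not state how circle intersections are turned into attention scores.

```python
import math


def circle_center(tower_xy, azimuth_deg, distance):
    """Return the center of a sector's circle.

    Assumption: azimuth is in degrees clockwise from north, and
    coordinates are planar (x = east, y = north).
    """
    theta = math.radians(azimuth_deg)
    x, y = tower_xy
    return (x + distance * math.sin(theta), y + distance * math.cos(theta))


def intersection_area(c1, r1, c2, r2):
    """Area of the overlap (lens) between two circles."""
    d = math.dist(c1, c2)
    if d >= r1 + r2:
        # Circles are disjoint or touch at a single point.
        return 0.0
    if d <= abs(r1 - r2):
        # One circle lies entirely inside the other.
        return math.pi * min(r1, r2) ** 2
    # Half-angles subtended by the shared chord at each center.
    a1 = math.acos((d * d + r1 * r1 - r2 * r2) / (2 * d * r1))
    a2 = math.acos((d * d + r2 * r2 - r1 * r1) / (2 * d * r2))
    # Sum of the two circular segments that form the lens.
    seg1 = r1 * r1 * (a1 - math.sin(2 * a1) / 2)
    seg2 = r2 * r2 * (a2 - math.sin(2 * a2) / 2)
    return seg1 + seg2


# Hypothetical example: two towers 2 units apart, sectors facing each other.
center_a = circle_center((0.0, 0.0), azimuth_deg=90.0, distance=0.5)
center_b = circle_center((2.0, 0.0), azimuth_deg=270.0, distance=0.5)
overlap = intersection_area(center_a, 1.0, center_b, 1.0)
```

In this toy setup the two circle centers end up one unit apart, so circles of radius 1 overlap partially. In a learned model the distance and radius would be trainable rather than fixed constants as they are here.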